Imaginative Walks: Generative Random Walk Deviation Loss for Improved Unseen Learning Representation

Under Review

- Divyansh Jha*

- Kai Yi*

- Ivan Skorokhodov

- Mohamed Elhoseiny

King Abdullah University of Science and Technology (KAUST)

Abstract

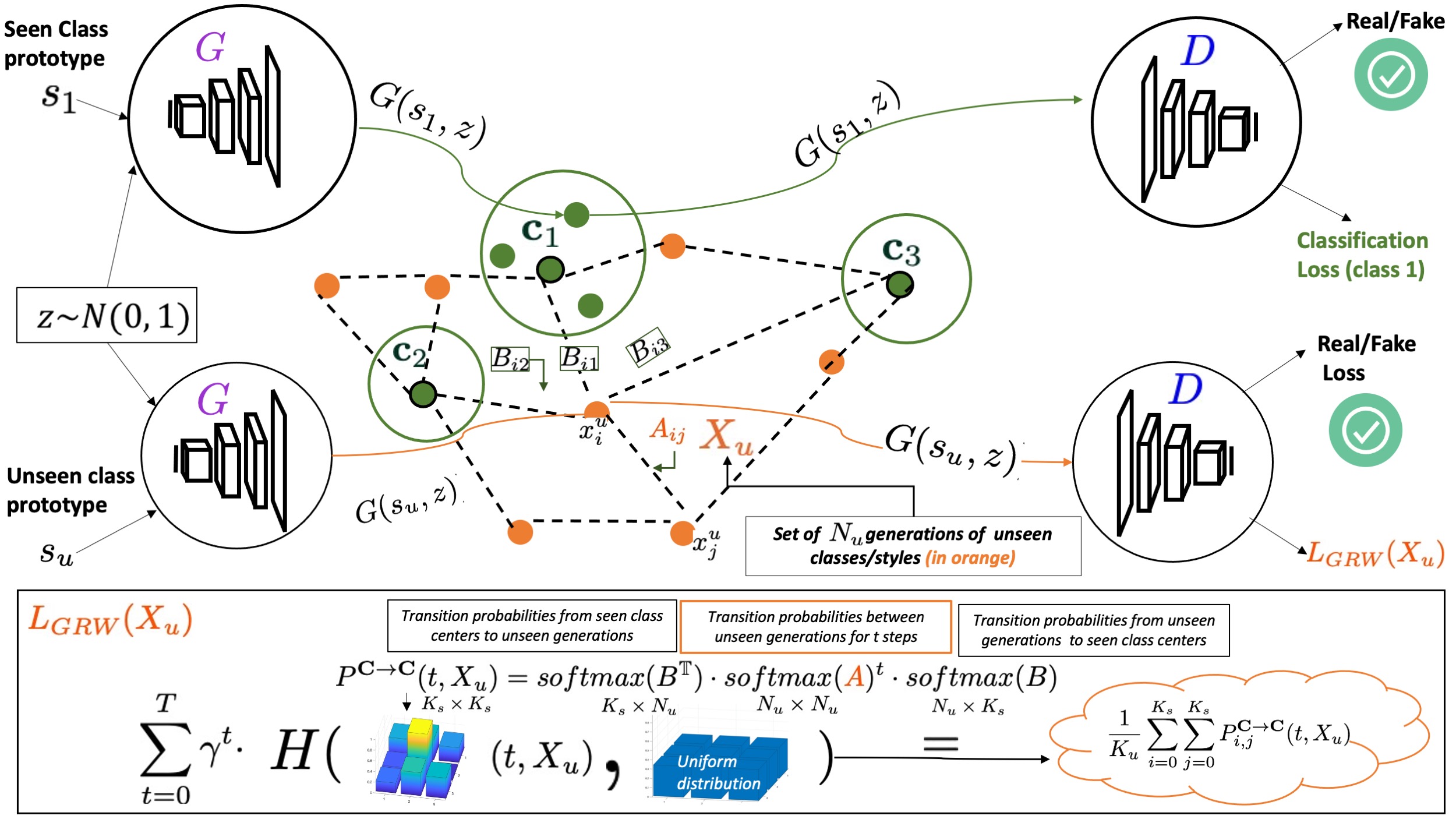

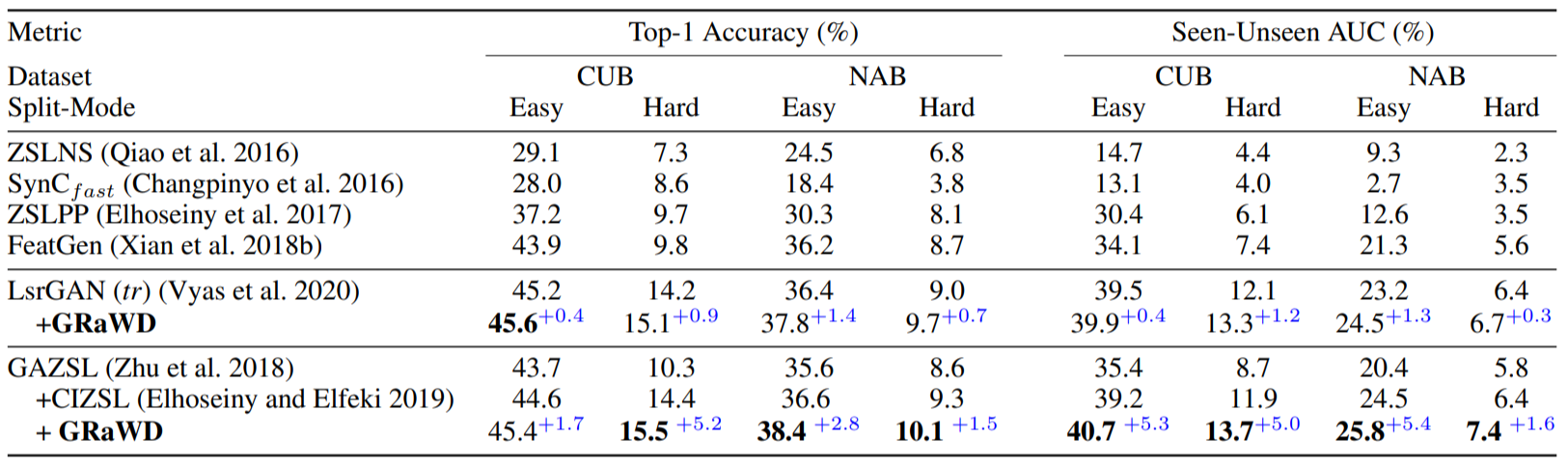

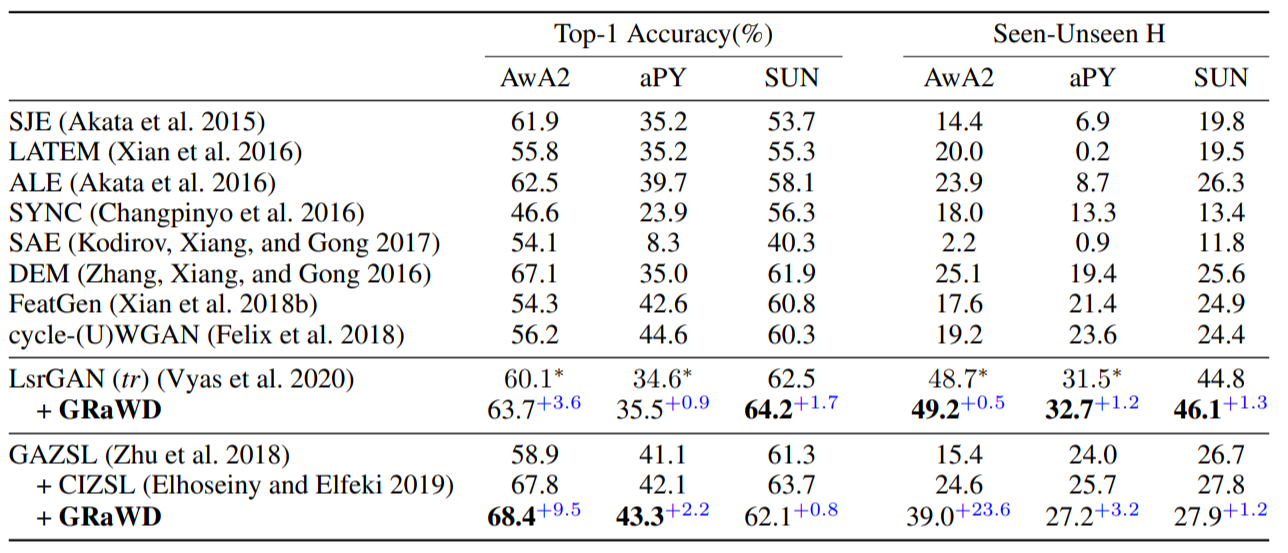

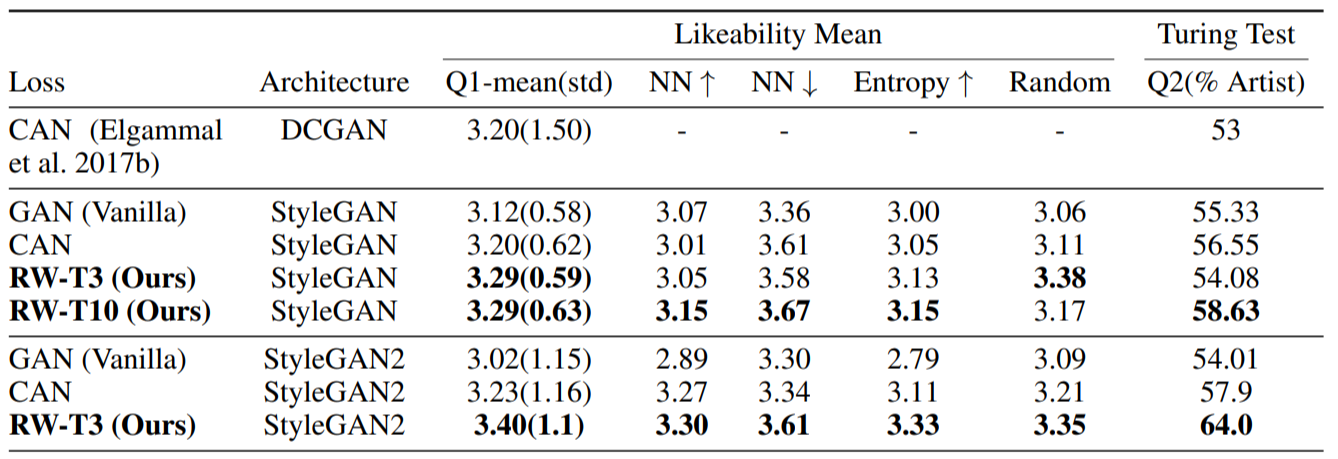

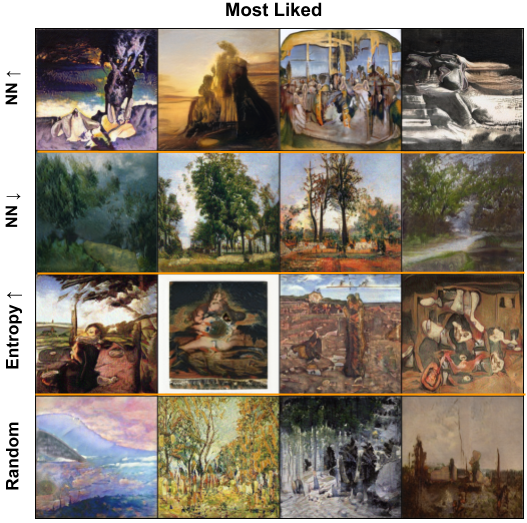

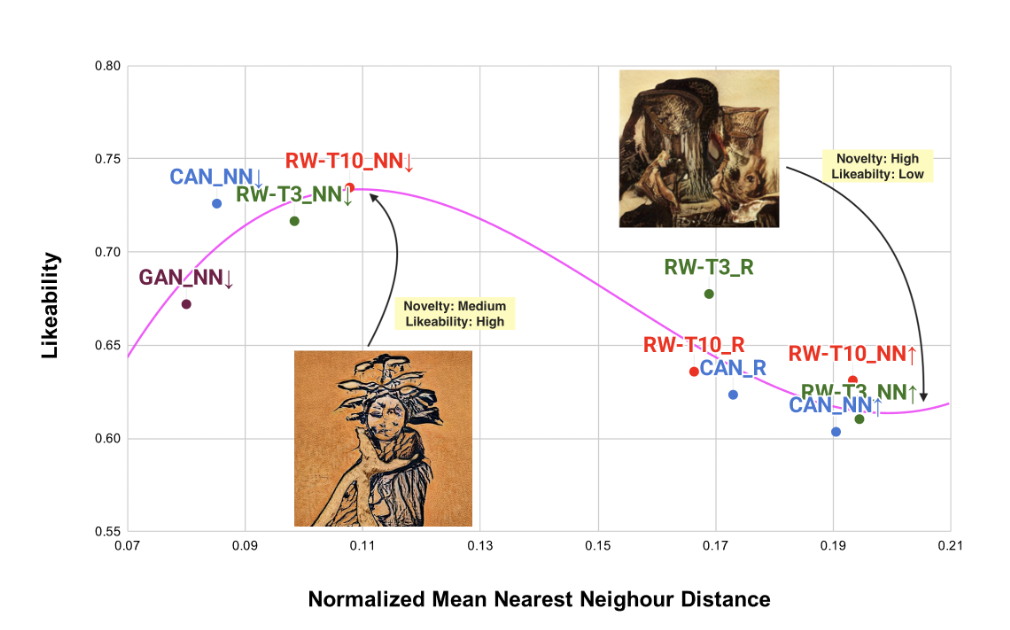

We propose a novel loss for generative models, dubbed GRaWD (Generative Random Walk Deviation), to improve learned representations of unexplored visual spaces. High-quality representations of unseen classes (or styles) are critical for novel image generation and for better generative understanding of unseen visual classes, i.e., zero-shot learning (ZSL). By generating representations of unseen classes from their semantic descriptions, e.g., attributes or text, generative ZSL attempts to differentiate unseen from seen categories. The proposed GRaWD loss is defined by constructing a dynamic graph that includes the seen class/style centers and the generated samples in the current minibatch. Our loss starts a random walk from each center and routes it through visual generations produced from hallucinated unseen classes. As a deviation signal, we encourage the random walk to land, after $t$ steps, in a feature representation that is difficult to classify as any of the seen classes. We demonstrate that the proposed loss improves unseen class representation quality inductively on text-based ZSL benchmarks (CUB and NABirds) and on attribute-based ZSL benchmarks (AWA2, SUN, and aPY). In addition, we investigate the ability of the proposed loss to generate meaningful novel visual art on the WikiArt dataset. Experiments and human evaluations show that the proposed GRaWD loss improves StyleGAN1 and StyleGAN2 generation quality and creates novel art that human evaluators significantly prefer. Our code is publicly available at https://github.com/Vision-CAIR/GRaWD.

Video

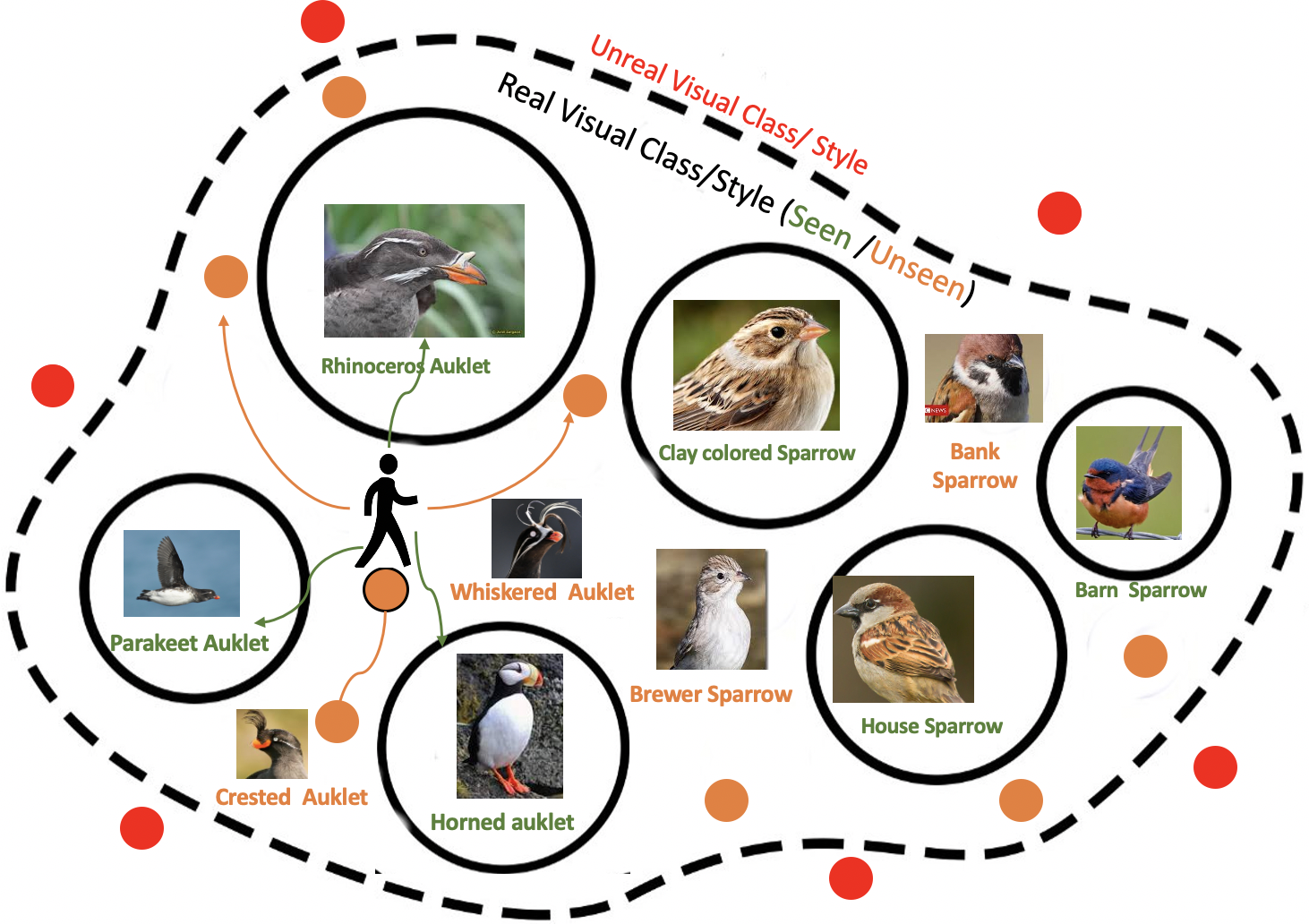

Motivation

Method: GRaWD
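
The abstract describes the loss as a random walk that starts at each seen class center, moves through generated unseen samples for $t$ steps, and should land in a place that is hard to classify as any seen class. The NumPy sketch below is one possible reading of that description, not the authors' implementation (see the linked repository for that). The function name `random_walk_deviation_loss`, the `steps` parameter, the dot-product similarity, the softmax transition probabilities, and the cross-entropy against a uniform target are all assumptions made for illustration.

```python
import numpy as np


def softmax(z, axis=-1):
    """Numerically stable softmax along the given axis."""
    z = z - z.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def random_walk_deviation_loss(centers, generated, steps=3, eps=1e-12):
    """Illustrative random-walk deviation loss (hypothetical formulation).

    centers:   (K, d) seen class/style centers
    generated: (N, d) features generated for hallucinated unseen classes, N >= 2
    steps:     number of hops among generated samples (the t in the abstract)
    """
    centers = np.asarray(centers, dtype=float)
    generated = np.asarray(generated, dtype=float)
    num_centers = centers.shape[0]

    # Assumption: edge weights of the graph are dot-product similarities.
    sim_cx = centers @ generated.T        # (K, N) center -> generated
    sim_xx = generated @ generated.T      # (N, N) generated -> generated
    np.fill_diagonal(sim_xx, -np.inf)     # forbid self-loops

    p_cx = softmax(sim_cx, axis=1)        # leave a center
    p_xx = softmax(sim_xx, axis=1)        # hop between generated samples
    p_xc = softmax(sim_cx.T, axis=1)      # return to a center, shape (N, K)

    # Walk: center -> generated, then `steps` hops, then back to the centers.
    walk = p_cx
    for _ in range(steps):
        walk = walk @ p_xx
    landing = walk @ p_xc                 # (K, K), each row sums to 1

    # Deviation signal (assumption): push each landing distribution toward
    # uniform over seen classes, so the walk is hard to assign to any of them.
    uniform = 1.0 / num_centers
    loss = -np.mean(np.sum(uniform * np.log(landing + eps), axis=1))
    return loss, landing
```

Under these assumptions the loss is bounded below by $\log K$ for $K$ seen classes, and it reaches that bound only when every landing distribution is uniform, i.e., when the generated samples are equally (un)related to all seen centers.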

Quantitative Results in ZSL

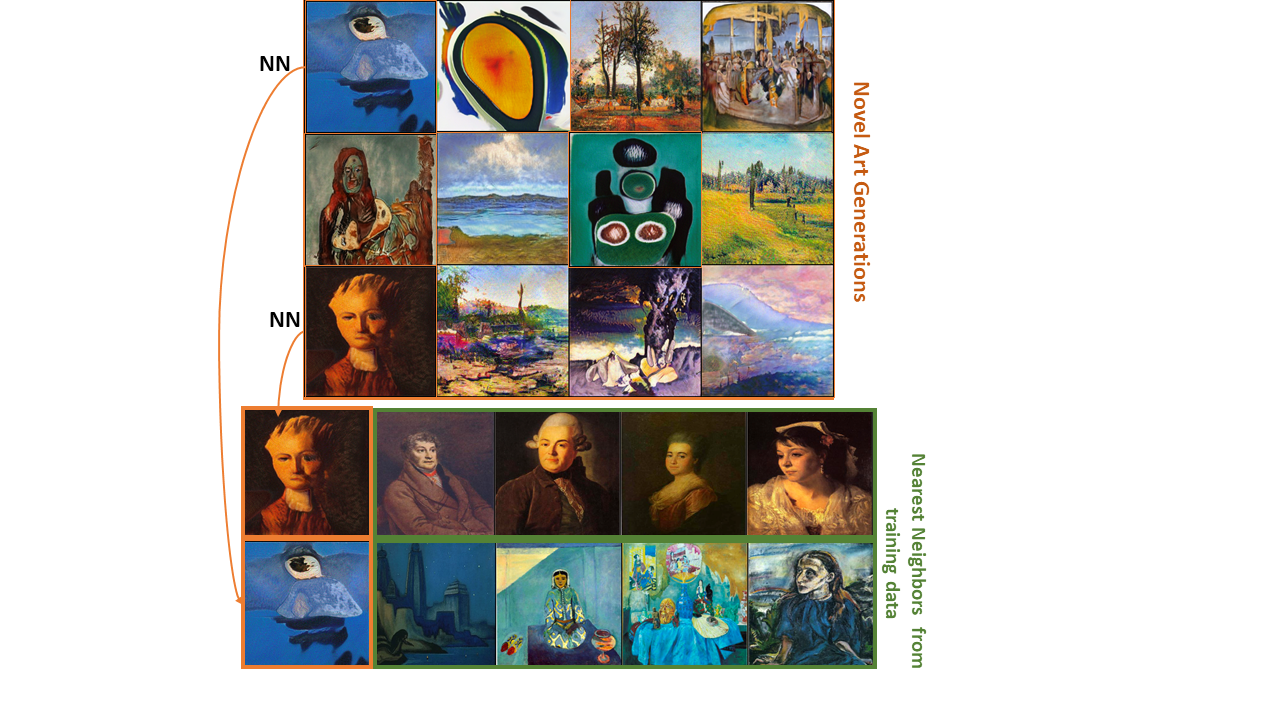

Results in Art Generation

Citation

If you find our work useful in your research, please consider citing:

@article{elhoseiny2021imaginative,
  title={Imaginative Walks: Generative Random Walk Deviation Loss for Improved Unseen Learning Representation},
  author={Elhoseiny, Mohamed and Jha, Divyansh and Yi, Kai and Skorokhodov, Ivan},
  journal={arXiv preprint arXiv:2104.09757},
  year={2021}
}